Background

Screening for retinal diseases has become a top healthcare priority. Today, 220 million people are affected by retinal diseases, and this number is estimated to grow to 434 million by 2030 due to the aging population and the obesity epidemic. As a result, the sustainability of screening programs is not guaranteed, and 50% of subjects at risk are still not screened because of a lack of specialists and poor coverage in rural areas.

Fortunately, retinal diseases are up to 80% preventable if those at risk are screened periodically and receive timely treatment. Retinal fundus photography screening has been proven highly cost-effective and is rapidly being implemented worldwide. However, current programs rely on manual image examination by a highly specialized workforce, which is extremely expensive. Considering that an examination by a medical specialist costs the health system €130, and that more than 90% of patients attending eye screening do not need treatment, the cost of handling healthy patients is enormous.

Aim

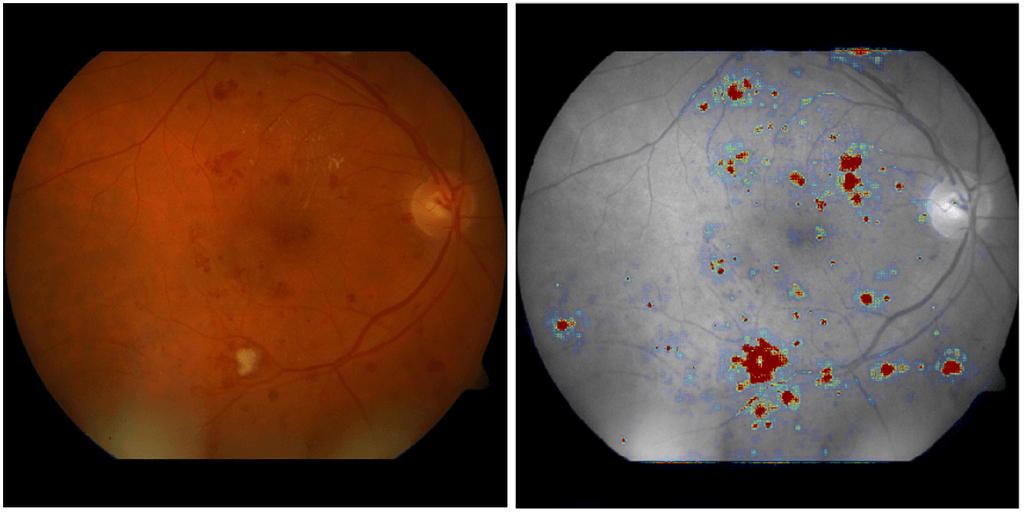

The goal of this project is to automate the screening workflow for eye diseases using innovative techniques based on artificial neural networks. The scientific challenge is to develop dynamic learning strategies that allow deep learning systems to continuously learn new concepts and adapt to new data over time, so that they become more robust in changing clinical settings and maintain their accuracy throughout their lifetime. This will be done by:

- Allowing deep learning systems to provide interpretable predictions and communicate with human experts.

- Increasing specialization of deep learning systems over time.

- Dynamically modifying deep learning parameters and architecture based on human experts’ feedback.

Implementing dynamic deep learning methods in eye screening workflows will substantially reduce the burden on highly trained personnel and the associated costs, while maintaining quality, achieving higher throughput, and increasing screening coverage.

Figure: Visual interpretability of automated screening in a color fundus image predicted as referable diabetic retinopathy.